Let’s cut through the noise

Right, let’s be honest about GitHub Copilot agents. After spending a fair bit of time with them since they were launched (19th May 2025, so this month!), I can tell you they’re genuinely useful – but only if you know when and how to use them properly. I’ve never truly believed we’re close to the “AI will replace developers” future the rhetoric promises, at least not yet. These are tools, and like any tool, they’re only as good as the person using them.

I’ve been working with AI-assisted development tools since the early days of Microsoft Cognitive Services, and I can say with confidence that what we have now with GitHub Copilot agents is an interesting step forward, or at least a decent jump in the right direction. That said, there’s a lot of marketing waffle out there, so let me talk candidly about getting set up and actually making these things work for you.

Setting up GitHub Copilot (The actual process)

For individuals

Getting started as an individual is relatively straightforward, which is more than I can say for most enterprise software these days.

Step 1: Choose your poison

- Free tier: Good for dipping your toes in, but limited features

- Pro ($10/month): This is where things get interesting - unlimited completions, access to multiple models

- Pro+ ($39/month): All the bells and whistles, including the preview models, which are mostly worth having (although at the time of writing I’ve been hitting some issues with the Google Gemini models when used as agents)

My honest recommendation? Start with Pro. The free tier is fine for a quick look, but you’ll hit limitations fast if you’re doing any serious development work.

Step 2: Sign up

Head to the GitHub Copilot page and follow the setup flow. It’s genuinely that simple. GitHub’s done a decent job of not over-complicating this bit.

Step 3: Install the VS Code extension

You’ll want the main GitHub Copilot extension and the GitHub Copilot Chat extension. Don’t skip the chat one – that’s where the agent magic happens.

For organisations

The setup is a little bit more involved for organisations, but thankfully GitHub’s documentation is actually fairly helpful here.

Organisation setup:

- Admin permissions: You’ll need organisation admin rights to set this up

- Billing: Sort out who’s paying and how many seats you need

- Policy configuration: Decide on usage policies, data retention, etc.

The key thing for organisations is the policy configuration. You can control whether suggestions that match public code are allowed, whether telemetry is collected, and how data is handled. This matters more than you might think, especially if you’re working with sensitive codebases.

For the detailed organisation setup process, GitHub’s official documentation is actually worth reading. They’ve done a decent job of explaining the enterprise features without drowning you in corporate jargon.

Getting agents working in VS Code

Once you’ve got Copilot installed, the fun begins. Agent mode is where this tool transforms from “helpful autocomplete” to a decent facsimile of an “AI pair programmer”.

Activating agent mode:

In VS Code, open the Copilot chat (Ctrl+Shift+I or Cmd+Shift+I) and type @workspace followed by your request. That @workspace bit is crucial – it tells Copilot to consider your entire project context.

Here’s a practical example:

@workspace Can you help me refactor this authentication service to use dependency injection?

The agent will analyse your codebase, understand the existing patterns, and suggest changes across multiple files. It’s genuinely impressive when it works well.
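If dependency injection is new to you, here’s a minimal, hypothetical Python sketch of the kind of change that prompt is asking for. The names (UserStore, AuthService, InMemoryUserStore and so on) are made up for illustration, and this isn’t Copilot output; what the agent actually suggests will depend on your codebase and language.

```python
from typing import Optional, Protocol


class UserStore(Protocol):
    """Hypothetical interface: anything that can map a session token to a username."""

    def user_for_token(self, token: str) -> Optional[str]: ...


# Before: the service builds its own dependency, so tests can't swap it out.
class DatabaseUserStore:
    def user_for_token(self, token: str) -> Optional[str]:
        raise NotImplementedError("would query a real database")


class TightlyCoupledAuthService:
    def __init__(self) -> None:
        self._store = DatabaseUserStore()  # hard-wired dependency

    def current_user(self, token: str) -> Optional[str]:
        return self._store.user_for_token(token)


# After: the dependency is passed in through the constructor.
class AuthService:
    def __init__(self, store: UserStore) -> None:
        self._store = store

    def current_user(self, token: str) -> Optional[str]:
        return self._store.user_for_token(token)


class InMemoryUserStore:
    """A test double that satisfies UserStore without needing a database."""

    def __init__(self, tokens: dict[str, str]) -> None:
        self._tokens = tokens

    def user_for_token(self, token: str) -> Optional[str]:
        return self._tokens.get(token)


if __name__ == "__main__":
    service = AuthService(InMemoryUserStore({"abc123": "alice"}))
    print(service.current_user("abc123"))  # alice
    print(service.current_user("nope"))    # None
```

The point of the “after” version is that the service no longer decides where its data comes from, so you can hand it an in-memory fake in tests and a real database-backed store in production.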

The models that actually matter

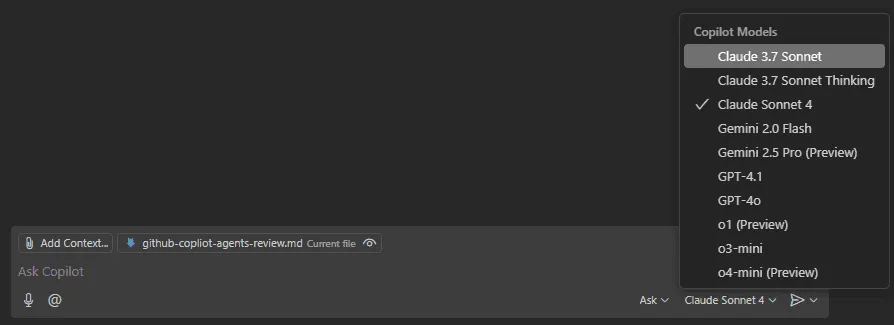

One of the best bits about the Pro (and Pro+) tier is access to different models. Here’s my honest take on what’s actually useful:

Claude Sonnet 4: Brilliant for code reasoning and explanation. If you’re working with complex logic, it performed very well in my testing.

GPT-4o: Still solid for general coding tasks. Good balance of speed and capability.

o1-preview models: Solid, stable, and generally reliable across a wide range of coding problems. The outputs were consistently coherent and relevant, making them a good default choice.

Gemini 2.5 Pro: Fast and surprisingly good for quick refactoring tasks; however, I ran into a number of issues with this model during testing, so I wouldn’t recommend it just yet.

You can switch between models using the dropdown in the chat interface. Don’t just stick with the default – experiment and find what works best for your use cases.

The #codebase tag - your new best friend

Here’s something that’s not immediately obvious but makes a massive difference: use #codebase in your prompts when you want Copilot to consider your entire solution.

Instead of:

How do I implement caching in this API?

Try:

#codebase How should I implement caching in this API, considering the existing architecture and patterns?

The difference in response quality is night and day. Copilot will actually look at your existing code patterns, understand your architectural choices, and suggest implementations that fit properly rather than giving you generic Stack Overflow answers.

When to actually use these tools

This is where I see a lot of developers going wrong. They either try to use Copilot for everything or dismiss it entirely. The truth is somewhere in the middle.

Where agents excel:

- Boilerplate generation: Creating DTOs, basic CRUD operations, test scaffolding

- Code exploration: Understanding unfamiliar codebases, explaining complex algorithms

- Refactoring: Suggesting improvements while maintaining existing patterns

- Documentation: Generating inline comments and README updates

- Bug hunting: Spotting obvious issues and suggesting fixes

Where they’re less helpful:

- Business logic: You still need to understand the domain and requirements

- Architecture decisions: These need human judgment and context

- Performance optimisation: Requires understanding of the specific performance characteristics

- Security implementation: Too important to delegate to an AI that might miss edge cases

My practical workflow:

- Start coding the tricky bits yourself – the business logic, the core algorithms

- Use agents for the repetitive stuff – interfaces, basic implementations, tests

- Let agents help with exploration – “What does this function do?”, “How is authentication handled here?”

- Use them for rubber duck debugging – explaining your problem often helps you solve it

The reality check

Let me be very honest: GitHub Copilot agents aren’t going to make you a better developer overnight. They’re not going to solve architectural problems you don’t understand, and they’re definitely not going to replace the need to actually learn how to code.

What they will do is speed up the mundane stuff and help you explore code more efficiently. I find myself using them most when:

- Working with unfamiliar codebases (the #codebase tag is brilliant for this)

- Generating test cases (they’re surprisingly good at thinking of edge cases)

- Explaining legacy code (better than spending hours reading through uncommented functions)

- Quick prototyping (getting to a working state faster, then refining)

The key is treating them as a very smart junior developer who knows the syntax but needs guidance on the business logic and architectural decisions.

Bringing it all together

GitHub Copilot agents are a genuinely useful addition to the developer toolkit. They’re not revolutionary in the “everything changes now” sense, but they’re evolutionary in the “this makes my day-to-day work noticeably better” sense.

The setup process is straightforward enough that there’s no excuse not to try them. Start with the Pro tier, spend some time learning the different models and when to use them, and don’t forget about the #codebase tag.

Most importantly, keep your expectations realistic. These tools are brilliant assistants, but they’re not replacements for understanding your domain, thinking through problems properly, and writing code that you can stand behind.

If you’re on the fence about trying them, my advice is simple: give it a month. Use them for the boring stuff, let them help with exploration, but keep doing the thinking yourself. You might be surprised at how much time you save on the tedious bits, leaving more mental energy for the problems that actually need solving.

And if you find yourself becoming overly dependent on them for basic coding tasks, that’s your cue to step back and make sure you’re still keeping your core skills sharp. These tools should amplify your abilities, not replace them.

Right, that’s enough waffling from me. I genuinely think you should give these a try and use them for what they’re good at. If you have any questions or want to share your experiences, feel free to reach out. Happy coding!